With the improved cost-performance ratio of imaging equipment, faster computer information processing, and the maturing of the related theory, visual servoing technology now meets the technical conditions for practical application, and its associated technical problems have become a research hotspot.

Most of the information humans acquire from the external world is obtained through the eyes. For centuries, humanity has dreamt of creating intelligent machines, the first function of which is to imitate the human eye, enabling recognition and understanding of the external world.

Numerous structures in the human brain take part in processing visual information, which lets humans handle many vision-related problems effortlessly. However, our understanding of visual cognition as a process remains limited, which makes the dream of intelligent machines difficult to realize.

With the development of camera technology and the emergence of computers, people began to build intelligent machines with visual functions, and the discipline and industry of machine vision gradually took shape.

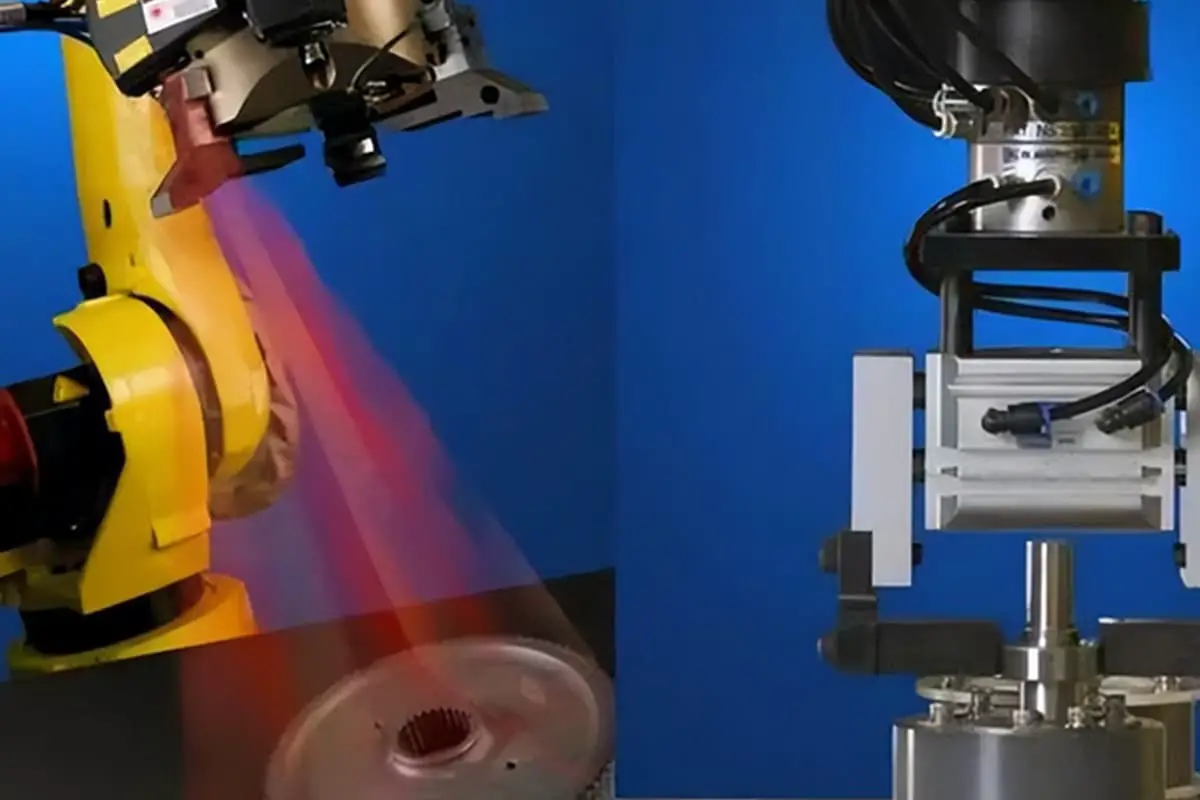

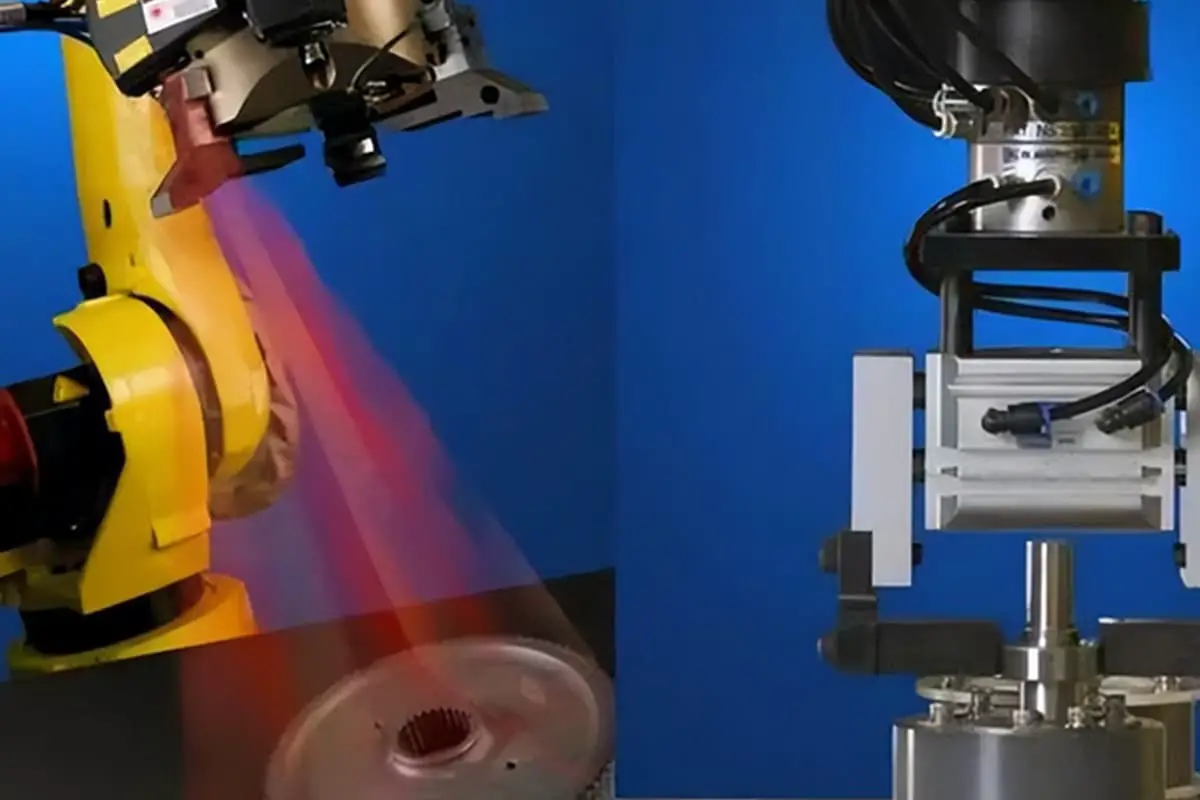

Machine vision, as defined by the Machine Vision Division of the Society of Manufacturing Engineers (SME) and the Automated Imaging Association of the Robotic Industries Association (RIA), is “the automatic reception and processing of an image of a real object through optical devices and non-contact sensors to obtain the required information or to control the movements of a robot.”

As a biomimetic system similar to the human eye, machine vision broadly encompasses the acquisition of real object information through optical devices, and the processing and execution of related information. This includes visible and non-visible vision, and even the acquisition and processing of information inside objects that human vision cannot directly observe.

In the 1960s, due to the advancement of robotics and computer technology, people began to research robots with visual capabilities. However, in these studies, the robot’s vision and movement were, strictly speaking, open-loop.

In such a system, the visual system processed an image to determine the position and orientation of the target, computed the pose the robot should move to, and supplied that result once. It played no further part while the robot moved.

In 1973, the concept of visual feedback was introduced when a visual system was first applied to robot control.

Not until 1979 did Hill and Park propose the concept of “visual servoing”. Unlike visual feedback, which only extracts signals from visual information, visual servoing encompasses the entire process from visual signal processing to robot control, offering a more comprehensive reflection of relevant research on robot vision and control.

Since the 1980s, with the advancement of computer technology and camera equipment, technical issues of robotic visual servoing systems have attracted the attention of many researchers. In recent years, significant progress has been made in both the theory and application of robotic visual servoing.

The technology is a frequent special topic at academic conferences and has gradually developed into an independent field spanning robotics, automatic control, and image processing.

Presently, robotic vision servo control systems can be categorized in the following ways:

● Based on the number of cameras, they can be divided into monocular vision servo systems, binocular vision servo systems, and multi-eye vision servo systems.

Monocular vision systems can only obtain two-dimensional images and cannot directly obtain the depth information of the target.

Multi-eye vision servo systems can capture images from multiple directions of the target, providing rich information. However, they require large amounts of image data processing and the more cameras involved, the more challenging it is to maintain system stability. Currently, binocular vision is mainly used in vision servo systems.
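The depth limitation of a single camera, and the appeal of a binocular setup, can be illustrated with the standard pinhole stereo relation Z = f·B/d. The following is a minimal sketch under stated assumptions, not a description of any particular system: it assumes two rectified, parallel cameras, and the function name `depth_from_disparity` and its parameters are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by two rectified, parallel cameras.

    Assumptions (illustrative only): focal length in pixels, baseline in
    metres, disparity = x_left - x_right in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # One image gives only (x, y); the second view supplies the disparity
    # from which depth is recovered.
    print(depth_from_disparity(focal_px=700.0, baseline_m=0.12, disparity_px=35.0))
```

Because depth is inversely proportional to disparity, a fixed matching error in pixels produces a larger depth error for distant targets than for near ones.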

● Depending on the camera placement, systems can be classified as eye-in-hand systems and fixed camera systems (eye-to-hand or standalone).

In theory, eye-in-hand systems can achieve precise control, but they are sensitive to calibration errors and robot motion errors.

Fixed camera systems are not sensitive to the robot’s kinematic errors, but under the same conditions, the accuracy of the target pose information obtained is not as good as that of the eye-in-hand system, resulting in relatively lower control accuracy.

● Depending on whether the control error is defined in the robot’s task space or in terms of image features, vision servo systems are divided into position-based vision servo systems and image-based vision servo systems.

In position-based vision servo systems, after processing the image, the pose of the target relative to the camera and robot is calculated.

This requires calibrated models of the camera, target, and robot. Because calibration accuracy directly limits control accuracy, calibration is the main difficulty of this method. During control, the required pose change is converted into joint rotation angles, which the joint controllers then execute.
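The role of calibration in a position-based scheme can be sketched by chaining coordinate transforms: the target pose in the robot base frame is the calibrated camera pose composed with the target pose measured in the camera frame. The example below is a planar (2D) simplification for illustration only, and all names (`pose_to_matrix`, `compose`, `target_in_base`) are hypothetical.

```python
import math


def pose_to_matrix(x, y, theta):
    """3x3 homogeneous transform for a planar pose (x, y, heading theta in rad)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]]


def compose(a, b):
    """Matrix product a @ b for 3x3 nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matrix_to_pose(m):
    """Recover (x, y, theta) from a planar homogeneous transform."""
    return m[0][2], m[1][2], math.atan2(m[1][0], m[0][0])


def target_in_base(base_T_cam, cam_T_target):
    """Target pose in the robot base frame.

    base_T_cam is assumed to come from calibration; cam_T_target is assumed
    to come from image processing.
    """
    return matrix_to_pose(compose(base_T_cam, cam_T_target))


if __name__ == "__main__":
    base_T_cam = pose_to_matrix(1.0, 0.0, math.pi / 2)   # calibrated camera pose
    cam_T_target = pose_to_matrix(0.5, 0.0, 0.0)         # measured from the image
    print(target_in_base(base_T_cam, cam_T_target))
```

Any error in `base_T_cam` passes straight through the product into the computed target pose, which is why calibration accuracy bounds the achievable control accuracy in this scheme.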

In image-based vision servo systems, the control error is the difference between the image features currently observed for the target and the desired image features.

For this control method, the key issue is how to establish the image Jacobian matrix (also called the interaction matrix), which relates changes in the image features to the velocity of the robot hand’s pose. A second issue is that the image is two-dimensional, while computing the image Jacobian requires an estimate of the target depth (three-dimensional information), which has long been a challenge in computer vision.
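For a single point feature, the image Jacobian has a well-known closed form in normalized image coordinates, and a common control law is v = -λ L⁺ e, where e is the feature error and L⁺ is the pseudo-inverse. The sketch below is illustrative only. It assumes the depth `Z` is known or estimated, that coordinates are normalized (focal length 1), and that the camera velocity is ordered (vx, vy, vz, wx, wy, wz). The function names are hypothetical.

```python
def interaction_matrix(x, y, Z):
    """2x6 image Jacobian of a point at normalized coordinates (x, y), depth Z."""
    return [
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ]


def ibvs_velocity(point, desired, Z, gain=0.5):
    """Camera velocity command v = -gain * pinv(L) * e for one point feature.

    For a full-rank 2x6 matrix, pinv(L) = L^T (L L^T)^-1, so only a 2x2
    system has to be solved.
    """
    x, y = point
    L = interaction_matrix(x, y, Z)
    e = [x - desired[0], y - desired[1]]          # feature error
    a = sum(v * v for v in L[0])                  # entries of L L^T
    b = sum(p * q for p, q in zip(L[0], L[1]))
    d = sum(v * v for v in L[1])
    det = a * d - b * b
    w0 = (d * e[0] - b * e[1]) / det              # w = (L L^T)^-1 e
    w1 = (a * e[1] - b * e[0]) / det
    return [-gain * (L[0][k] * w0 + L[1][k] * w1) for k in range(6)]


if __name__ == "__main__":
    v = ibvs_velocity(point=(0.2, -0.1), desired=(0.0, 0.0), Z=1.5)
    print(v)
```

The depth `Z` appears only in the translational columns, which is where a poor depth estimate degrades the command. A single point leaves most of the six degrees of freedom unconstrained, so practical systems stack the 2x6 blocks of several features before inverting.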

Methods for calculating the Jacobian matrix include formula derivation, calibration, estimation, and learning. The first two derive or calibrate the matrix from a model, estimation methods update it online, and learning methods mainly rely on neural networks.
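One widely used way to estimate a Jacobian online is a Broyden-style rank-one update, which corrects the current estimate so that it explains the most recently observed feature change. The text does not name a specific estimator, so this is only one possible choice, and the function name and parameters below are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def broyden_update(J: Matrix, dq: List[float], ds: List[float],
                   step: float = 1.0) -> Matrix:
    """Return J + step * (ds - J dq) dq^T / (dq^T dq).

    J  : current Jacobian estimate (m x n)
    dq : last robot motion increment (length n)
    ds : observed change of the image features (length m)
    """
    denom = sum(v * v for v in dq)
    if denom < 1e-12:
        # No meaningful motion, so nothing can be learned from this step.
        return [row[:] for row in J]
    predicted = [sum(jk * qk for jk, qk in zip(row, dq)) for row in J]
    return [
        [jk + step * (dsi - pred) * qk / denom for jk, qk in zip(row, dq)]
        for row, dsi, pred in zip(J, ds, predicted)
    ]


if __name__ == "__main__":
    J0 = [[1.0, 0.0], [0.0, 1.0]]                 # rough initial guess
    J1 = broyden_update(J0, dq=[0.1, -0.2], ds=[0.05, 0.3])
    print(J1)
```

With `step=1.0` the updated matrix reproduces the last observation exactly (J·dq = ds). A smaller step trades that exactness for robustness to image noise.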

● Depending on whether the robot’s closed-loop joint controllers are used, vision servo systems are divided into dynamic look-and-move systems and direct visual servo systems.

The former relies on joint feedback to stabilize the robotic arm: the image processing module calculates the velocity or position increment that the camera should have and passes it to the robot joint controller as a setpoint. In the latter, the image processing module directly calculates the control inputs for the joint motion of the robot arm.
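The two-loop structure of a dynamic look-and-move system can be sketched with a one-joint toy model: a slow vision loop issues position setpoints and a faster joint loop tracks them. This is a deliberately simplified illustration. The gains, loop rates, and the function name `look_and_move` are assumptions, and the "vision" measurement is replaced by the true error.

```python
def look_and_move(q, target, vision_cycles=20, inner_steps=10,
                  vision_gain=0.5, joint_kp=0.4):
    """Simulate a 1-DOF look-and-move loop and return the final joint position.

    Outer loop: the vision module turns the observed error into a setpoint.
    Inner loop: the joint controller tracks that setpoint using joint feedback.
    """
    for _ in range(vision_cycles):
        error = target - q                      # stands in for an image-derived error
        setpoint = q + vision_gain * error      # position increment from vision
        for _ in range(inner_steps):            # joint servo runs at a higher rate
            q += joint_kp * (setpoint - q)
    return q


if __name__ == "__main__":
    print(look_and_move(q=0.0, target=1.0))
```

In a direct visual servo, the inner loop would disappear and the vision module would compute the joint commands itself, which places much tighter demands on the image processing rate.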

Visual servoing research has spanned nearly two decades. Because the field is multidisciplinary, however, its progress depends heavily on advances in each of the contributing disciplines, and many problems in visual servoing research remain unresolved.

Key future research directions in visual servoing include:

• Rapidly and robustly capturing image features in real-world environments is a critical issue for visual servoing systems.

Given the large amount of data involved in image processing and the development of programmable device technology, implementing general-purpose algorithms in hardware to accelerate processing may help solve this problem.

• Establishing relevant theories and software suitable for robotic vision systems.

Many of the image processing methods currently used in robotic vision servoing systems were not designed for these systems. If specialized software platforms were available, they could reduce the development workload, and implementing visual information processing in hardware could even improve system performance.

• Applying various artificial intelligence methods to robotic vision servoing systems.

While neural networks have already been implemented in robotic vision servoing, many intelligent methods have yet to be fully utilized.

Over-reliance on mathematical modelling and computations can lead to excessive computational demands during operation that current computer processing speeds may struggle to meet.

Humans, however, do not accomplish comparable tasks through extensive calculation, which suggests that artificial intelligence methods could reduce the amount of mathematical computation and help meet system speed requirements.

• Implementing active vision techniques in robotic vision servoing systems.

Active vision, a hot topic in current computer and machine vision research, allows vision systems to actively perceive their environment and extract needed image features based on set rules. This approach can resolve problems that are typically difficult to address.

• Integrating visual sensors with other external sensors.

To enable robots to perceive their environment more fully, and in particular to supplement the information provided by vision, other sensors could be added to robotic vision systems.

This could address some of the difficulties these systems face, but introducing multiple sensors would require solving the resulting information fusion and redundancy problems.
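As a small illustration of the fusion problem, redundant measurements of the same quantity can be combined by inverse-variance weighting, so that the more reliable sensor has more influence. This is only one basic fusion rule, and it assumes independent, unbiased measurement errors. The function name `fuse` and the example sensors are hypothetical.

```python
def fuse(estimates):
    """Fuse redundant measurements of one quantity.

    estimates: list of (value, variance) pairs, each variance > 0.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * val for w, (val, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    camera_depth = (2.0, 0.04)    # hypothetical vision-based range, variance in m^2
    laser_depth = (2.2, 0.01)     # hypothetical rangefinder reading
    print(fuse([camera_depth, laser_depth]))
```

The fused variance is never larger than the smallest input variance, which is the benefit that redundancy buys when the sensors' error models are trustworthy.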

In recent years, significant progress has been made in robotic vision servoing technology, with increasing practical applications both domestically and abroad. Many technical challenges are expected to be overcome in near-term research.

In the coming period, robotic vision servoing systems will hold a prominent position in robotic technology, and their industrial applications will continue to expand.
